Introduction

For the past year, FreeAgent has been running a machine learning model in production that categorises customer bank transactions. This model takes transaction descriptions and transaction amounts as inputs, and attempts to predict the corresponding accounting category.

This summer, I joined the data science team with the more specific goal of increasing model generalisation, which would allow it to make predictions for a larger fraction of incoming transactions. One possible way to achieve this generalisation goal is through improving the pre-processing pipeline by integrating named entity recognition before transactions are predicted.

Named entities in bank transactions

To understand the concept of improving model generalisation through named entity recognition, consider the following two example bank transactions:

- ‘£5.00 Costa Store 123 London UK via Paypal’

- ‘£7.00 Costa Store 456 Birmingham UK via Paypal’

To the human eye it looks like our model might classify both transactions as ‘accommodation and meals’. But our model doesn’t necessarily know that “Costa Store 123” and “Costa Store 456” are both the same organisation or that “London UK” and “Birmingham UK” are just geographic locations. Such a variety of words may cause the model to have a hard time grouping these transactions together and will increase the vocabulary of terms the model needs to consider (the unique words that make up the input transaction descriptions).

So as you can imagine, transaction descriptions will vary greatly depending on the category they lie within. This variance can sometimes be extremely useful. For example, a transaction labeled as ‘wages’ may always contain the word ‘wages’ within the description, which is a rather strong giveaway!

Yet sometimes variance can become a tricky barrier to overcome – when a model comes across data that is useless on its own but useful within a description. Another good example of this would be a person’s name. Including several hundred thousand unique names whilst training a model may not help determine the difference between transactions and would only increase the model’s vocabulary.

Recognising named entities with AWS Comprehend

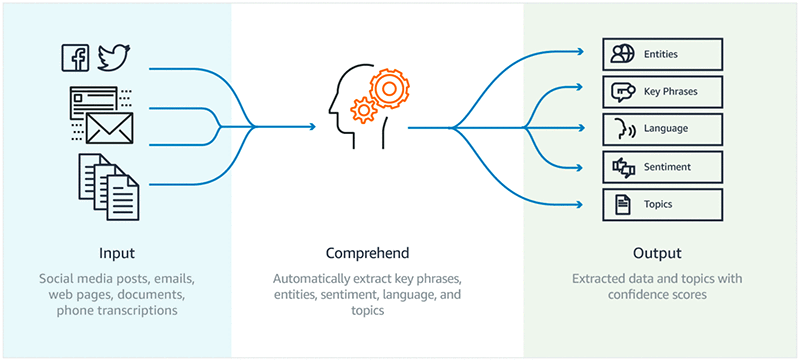

The AWS service Comprehend offers a variety of natural language processing (NLP) tasks, available both through the console and a set of APIs. These NLP tasks allow the user to obtain information about the input text such as entities, key phrases, language used, sentiment, syntax and personally identifiable information.

A concrete example of running Comprehend named entity recognition on transaction-like text is given below. AWS provides a handy guide to get this example Comprehend analysis working yourself.

Input – ‘Harry Tullett on 21/01/21 tesco store 1234 via mobile fpt 123456789n Edinburgh UK’

We can see that Comprehend successfully identifies groups of word tokens into entities such as “Harry Tullett” and “Edinburgh UK”.

One way to enrich the dataset is to call Comprehend as part of the pre-processing pipeline, resulting in the model being trained with descriptions that have named entities substituted. When calling Comprehend over the transaction description dataset, it returns a variety of entities and their predicted types within a JSON response of a similar structure as shown in the image below:

These types consisted of Date, Quantity, Organisation, Location, Person, Title, Commercial Item, Event and Other. Now all that was required was to take this JSON response, and whilst passing bank transactions into the model during pre-processing, replacing the identified entities with their corresponding token names.

Results of using Comprehend

After experimenting with different combinations of entity replacement, I found the best performance improvement for our categorisation task was with using the following set: Date, Quantity, Location, Title, Person. When using this tokenized data, the model produced a reduction in vocabulary size of 20% and an overall accuracy improvement of 3%, which, considering we had seemingly pushed the model to its limits already, was a surprisingly good improvement!

It was really interesting to see an improvement in overall accuracy when entity replacement was included in the pre-processing. However, there was now the issue of balancing the scales between obtaining this 3% improvement against the expenses incurred from incorporating Comprehend into the production model infrastructure – these expenses being the added processing time and the actual cost of the service. This decision ended up motivating me to explore simpler approaches to recognising and replacing transaction tokens; essentially attempting to imitate Comprehend.

Imitating Comprehend

To help reduce costs while maintaining as many of the benefits of entity recognition as possible, I have attempted to create a simpler approach to entity detection and replacement within the pre-processing pipeline in the form of a regex catcher. I have used the results and knowledge that Comprehend has produced in an attempt to produce a regex replacement class which acts as a simpler Comprehend.

Based on the test using Comprehend, I know that the most efficient entities are quantity, dates, titles, locations and persons. With regex replacement, the quantity and dates entities took the least effort, thus were first on the to-do list. By including this regex replacement within the pre-processing pipeline, the gap between the model’s general accuracy before and after Comprehend was shrunk from 3% to 1.5%.

Concluding thoughts

Although in the end we decided not to use Comprehend, this research has been a brilliant example of why you should always test out additional machine learning techniques. Comprehend has ended up being a useful tool for determining the more important entities to replace and allowed us to focus on these entities ourselves, which removed a lot of the experimentation hassle that would have been required to find this out.